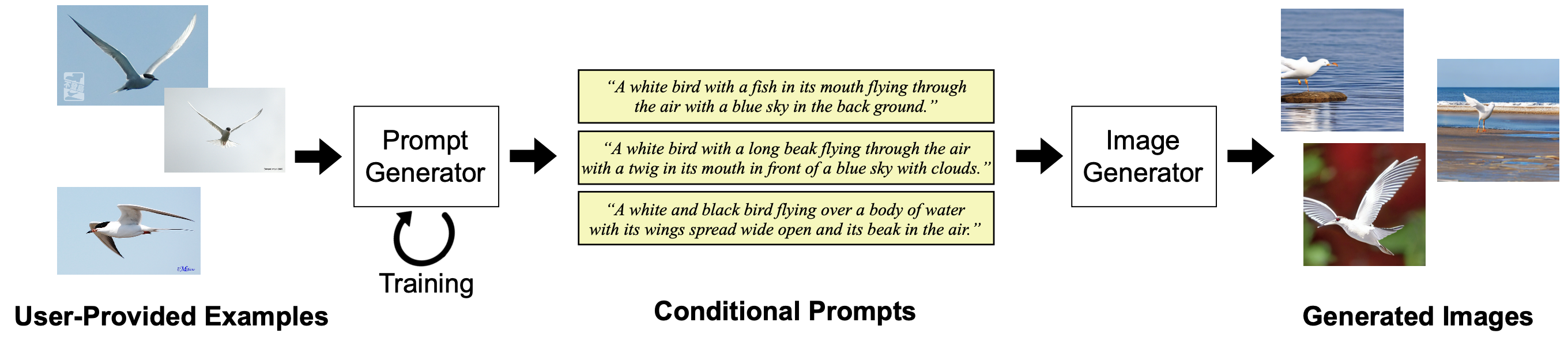

Example-based Conditioning for Text-to-Image Generative Models

Recent progress in image generation has made it possible to create high-quality images. Techniques using diffusion models have shown great potential in producing high-quality images from simple prompts, attracting inexperienced beginners in deep learning. However, prompt-based image generation often produces images that do not match the user’s expectations. Merely describing an image might not yield the desired result, and users might struggle to describe what they want accurately. This study introduces a new paradigm of example-based conditioning for interactive image generation to address this useability challenge. Our method presents users with a selection of images, allowing them to define categories by choosing examples that match their objectives. These examples serve as training data for our system to learn to generate prompts conditioned on the categories, thereby creating a variety of images according to the category requirements. Through user experiments, we demonstrate the advantages of our approach compared to the prompt-based baseline.