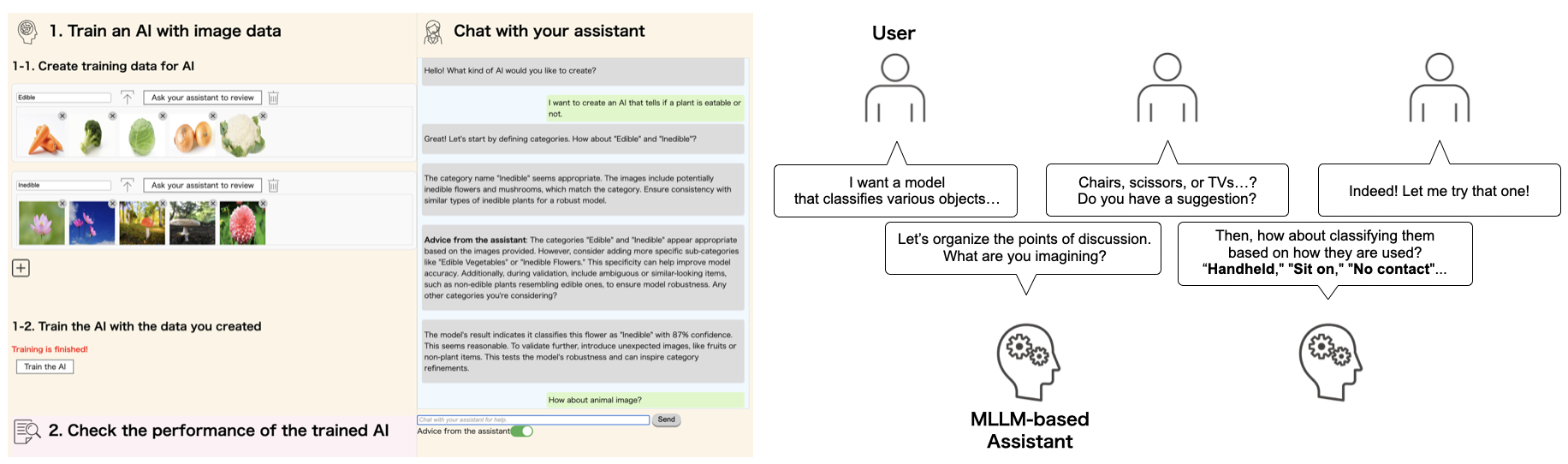

DuetML: Human-LLM Collaborative Machine Learning Framework for Non-Expert Users

Machine learning (ML) models have significantly impacted various domains in our everyday lives. While large language models (LLMs) offer intuitive interfaces and versatility, task-specific ML models remain valuable for their efficiency and focused performance in specialized tasks. However, developing these models requires technical expertise, making it particularly challenging for non-expert users to customize them for their unique needs. Although interactive machine learning (IML) aims to democratize ML development through user-friendly interfaces, users struggle to translate their requirements into appropriate ML tasks. We propose human-LLM collaborative ML as a new paradigm bridging human-driven IML and machine-driven LLM approaches. To realize this vision, we introduce DuetML, a framework that integrates multimodal LLMs (MLLMs) as interactive agents collaborating with users throughout the ML process. Our system carefully balances MLLM capabilities with user agency by implementing both reactive and proactive interactions between users and MLLM agents. Through a comparative user study, we demonstrate that DuetML enables non-expert users to define training data that better aligns with target tasks without increasing cognitive load, while offering opportunities for deeper engagement with ML task formulation.